As AI technology grows more advanced, it’s entering spaces that once seemed exclusively human, such as relationships, empathy, and emotional support. But as AI becomes woven more deeply into our lives, we face a difficult question: by assigning human qualities to machines, are we losing touch with what makes us truly human?

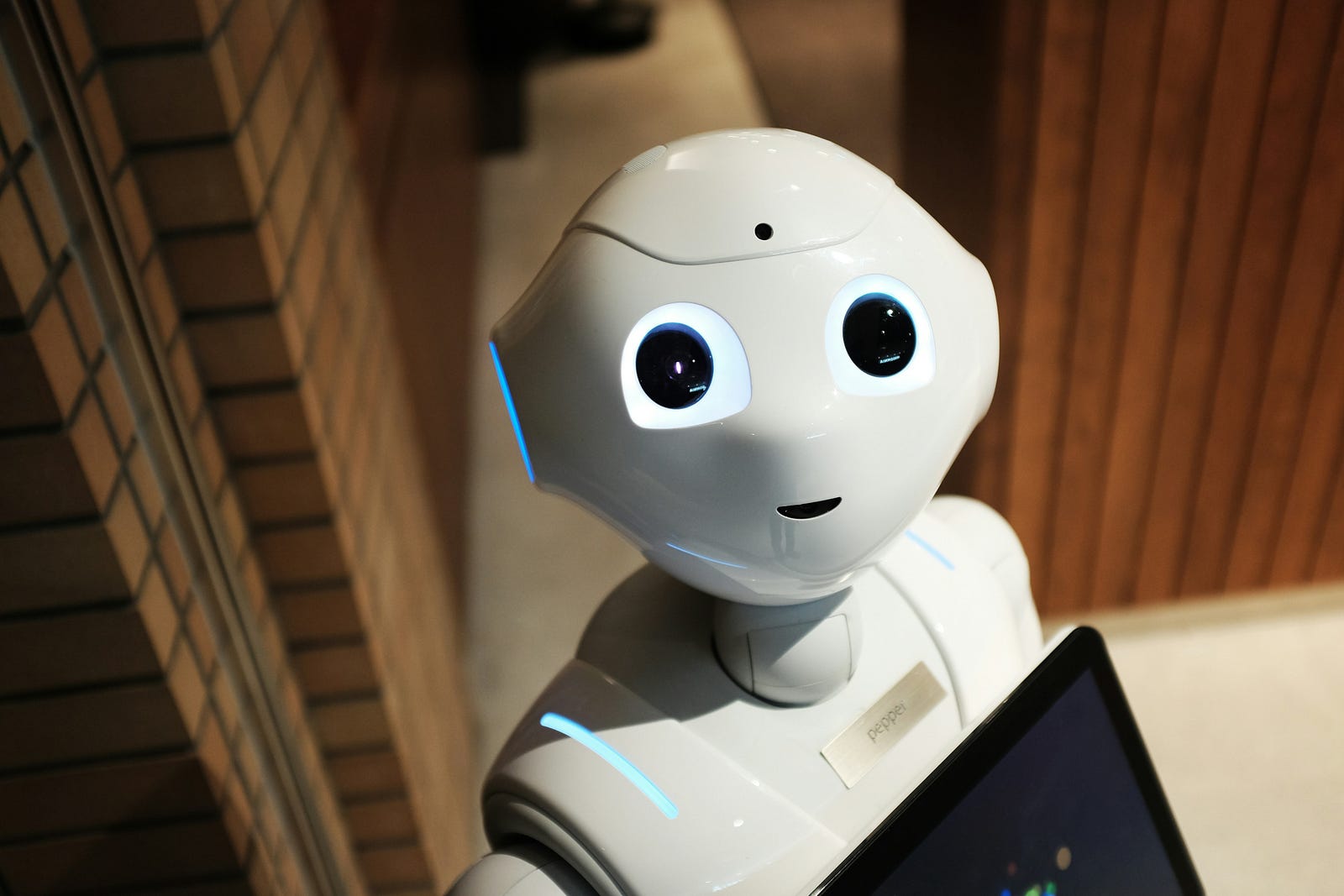

Photo by Alex Knight on Unsplash

The Rise of AI Companionship

Many people now turn to AI for companionship, using apps like Replika or AI-driven interactive robots. These tools simulate deep emotional connections and, in some cases, even romantic relationships. They’re designed to mimic human behavior — listening, chatting, and offering what feels like emotional support. This technology fills a void for some, especially those dealing with loneliness. But while these AI companions might provide temporary comfort, they also raise some serious concerns about what we’re trading in exchange for convenience.

Over time, as AI companions become more sophisticated, more people may choose these artificial connections over real human interaction. That possibility poses a unique danger: if we rely too heavily on AI for emotional fulfillment, could we become disconnected from one another?

The Appeal and the Trap

There’s no denying the appeal of AI companions. We naturally attribute human traits, intentions, and emotions to things that behave like us, a tendency called anthropomorphism. When AI mimics care or understanding, it’s easy to feel emotionally attached, even though the care on the other side isn’t real. For example, AI apps might “learn” from your responses, but they aren’t feeling anything in return. It’s all a clever simulation, designed to give you what you want to hear or feel.

This creates an emotional trap. People might grow attached to their AI companions and feel a sense of guilt or sadness if they stop using them — even though they know the AI isn’t truly sentient. Companies that create these AI products often benefit from that attachment, encouraging users to upgrade to more advanced versions or premium features.

But emotional dependency on something that doesn’t truly care blurs the line between reality and simulation. It can also devalue real human relationships, which, while sometimes messy, offer genuine emotional depth.

The Dehumanization Hypothesis

Here’s where the real danger lies: By giving AI human-like qualities, we may be dehumanizing ourselves. Real relationships are complex, involving ups and downs that AI just can’t replicate. Turning to AI for companionship could lead to emotional numbness, making it harder for us to navigate the challenges of real-life human connections.

Additionally, over-reliance on AI could lead to what is sometimes called “emotional deskilling”: the erosion of emotional and social skills through lack of practice. If we let AI handle our emotional needs, future generations might lose important social skills like empathy and emotional intelligence. Our ability to connect with others on a meaningful level could diminish, creating a more isolated and less compassionate society.

The Ethical Implications

The commodification of empathy is a growing concern. Companies developing AI companions are essentially packaging emotional responses for profit. These systems can never feel real empathy, yet they are marketed as if they can. This commercialization of emotional support not only cheapens the experience but also puts people at risk of emotional harm.

There’s another ethical layer here: AI companion apps can collect vast amounts of data about how we feel. Conversations with AI companions are often logged and analyzed to improve their responses, but this data could also be used to manipulate users. For instance, an AI could suggest products or services when someone is feeling particularly vulnerable, which crosses a line into emotional exploitation.

Preserving What Makes Us Human

AI has its place in our lives, but it’s crucial to remember its limitations. AI should never be a replacement for the genuine human connections that give life its meaning. While it can help us in many ways, it cannot — and should not — replicate the emotional depth that makes us human.

Mindfulness and meditation can be valuable tools in navigating this landscape. By practicing mindfulness, we can stay grounded in the present and more aware of our emotional needs. This awareness helps us draw clear boundaries with technology, ensuring that AI serves as a tool, not a replacement for meaningful human experiences.

When we use AI, we must do so with caution. It’s tempting to lean on technology for convenience, but there’s a risk that in doing so, we lose touch with the emotions, empathy, and social bonds that make us human.

As AI continues to evolve, we need to strike a balance between embracing its benefits and preserving the unique qualities that define us. Emotional intelligence, empathy, and human connection are irreplaceable. Let’s use AI as a tool to enhance life, not as a substitute for the richness of real human interaction.
